I’m busy working on some SQL Server to Couchbase Server blog posts, but in the meantime, I thought I’d leave you a little chestnut of JSON goodness for the Christmas weekend.

Wikibase has a huge database of information. All kinds of information. And it’s available to download in JSON format. Well, I’m not exactly sure what I’m going to do with it yet, but I thought it would be useful to import that data into Couchbase so I can run some N1QL queries on it.

To do that, I’m going to use cbimport.

Getting the Wikibase data

Wikibase downloads are available in JSON, XML, and RDF.

The file I downloaded was wikidata-20161219-all.json.bz2, which is over 6 GB in size. I uncompressed it to wikidata-20161219-all.json, which is almost 100 GB. That is a lot of data packed into one file.

Within the file, the data is structured as one big JSON array containing JSON objects. My goal was to create a Couchbase document for each JSON object in that array.
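cbimport takes care of splitting that array for you. To see why such an import does not have to load a 100 GB file into memory, here is a minimal Python sketch that walks a top-level JSON array one object at a time with the standard library's `json.JSONDecoder.raw_decode`, keeping only a small buffer in memory. It is not cbimport's implementation (nothing here assumes anything about its internals); the function name `iter_json_array` and the chunk size are hypothetical choices for illustration.

```python
import io
import json
from typing import Iterator, TextIO


def iter_json_array(stream: TextIO, chunk_size: int = 1 << 16) -> Iterator[dict]:
    """Yield each object of a top-level JSON array, one at a time.

    Only a bounded buffer (about one element plus one chunk) is kept in
    memory, so the input can be much larger than RAM.
    """
    decoder = json.JSONDecoder()
    buf = ""

    def fill() -> bool:
        # Append the next chunk to the buffer; False once the stream is exhausted.
        nonlocal buf
        data = stream.read(chunk_size)
        buf += data
        return bool(data)

    # Skip leading whitespace and consume the opening '['.
    while not buf.strip() and fill():
        pass
    buf = buf.lstrip()
    if not buf.startswith("["):
        raise ValueError("input is not a JSON array")
    buf = buf[1:]

    need_value = False  # just consumed ',' so another element must follow
    have_value = False  # just yielded an element so ',' or ']' must follow
    while True:
        buf = buf.lstrip()
        if not buf:
            if not fill():
                raise ValueError("unterminated JSON array")
            continue
        if buf[0] == "]" and not need_value:
            return  # end of the array
        if have_value:
            if buf[0] != ",":
                raise ValueError("expected ',' or ']' after an array element")
            buf = buf[1:]
            have_value, need_value = False, True
            continue
        try:
            obj, end = decoder.raw_decode(buf)
        except json.JSONDecodeError:
            # The element is probably split across chunks: read more and retry.
            if not fill():
                raise
            continue
        if not isinstance(obj, dict):
            raise ValueError("expected every array element to be a JSON object")
        yield obj
        buf = buf[end:]
        have_value, need_value = True, False


# A tiny chunk size forces objects to straddle chunk boundaries.
sample = io.StringIO('[{"id": "Q1", "type": "item"}, {"id": "P31", "type": "property"}]')
for doc in iter_json_array(sample, chunk_size=8):
    print(doc["id"])  # Q1, then P31
```

Each yielded object corresponds to one document you would want in the bucket; memory use depends on the largest single element, not on the size of the file.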

Using cbimport

cbimport is a handy utility that comes with Couchbase Server for importing JSON and CSV data.

You are required to tell this command line tool:

- json or csv ⇒ What type of file you are importing
- -c ⇒ Where your cluster is
- -b ⇒ The name of the bucket you want to import to
- -u and -p ⇒ Cluster credentials
- -d ⇒ The URL to the dataset to import (since my file is local, I use a file:// URL)

I also used these options:

- --generate-key ⇒ This tells cbimport how to construct the key for each document. If each document has an id field, for instance, I could specify a template of %id% to use that as the key.
- --format list ⇒ This tells cbimport what format the file is in. Some options are lines, list, and sample. I used list because the JSON is one big array in a single file, not one JSON object per line.
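To make the key-template idea concrete, here is a minimal Python sketch of `%field%` substitution. The function `render_key` is hypothetical: it only mimics the behavior described above, is not cbimport's code, and does not cover the other key-generator features cbimport offers.

```python
import re
from typing import Any, Mapping

# A %name% placeholder, e.g. "%id%" or the "%EXPERIMENT_ID%" in "csv::%EXPERIMENT_ID%".
_PLACEHOLDER = re.compile(r"%([^%]+)%")


def render_key(template: str, doc: Mapping[str, Any]) -> str:
    """Hypothetical key-template renderer.

    Each %field% is replaced with str(doc[field]); any other text is copied
    as-is. A KeyError is raised when a document lacks a referenced field.
    """
    return _PLACEHOLDER.sub(lambda m: str(doc[m.group(1)]), template)


item = {"id": "Q42", "type": "item"}
print(render_key("%id%", item))                # Q42
print(render_key("wiki::%type%::%id%", item))  # wiki::item::Q42
```

Literal text around the placeholders is how a prefix such as `csv::` ends up in every generated key.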

Using cbimport on Wikibase data

I have Couchbase Server installed on drive E. From the folder containing the Wikidata JSON file (mine is called wikidata-20161219-all.json, but yours may differ), I ran:

E:\Couchbase\Server\bin\cbimport.exe json -c couchbase://localhost -u Administrator -p password -b wikibase -d file://wikidata-20161219-all.json --generate-key %id% --format list
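If you script the import, one way to keep the backslashes in a Windows path and the `%id%` template from being interpreted by a shell is to pass the arguments as a list instead of a single command string. Here is a minimal Python sketch using only the options described in this post; `build_cbimport_json_args` is a hypothetical helper, and the path and credentials are the example values from the command above.

```python
from typing import List


def build_cbimport_json_args(
    cbimport: str,
    cluster: str,
    username: str,
    password: str,
    bucket: str,
    dataset_url: str,
    key_template: str,
    file_format: str = "list",
) -> List[str]:
    """Build an argv list for `cbimport json` from the options described above."""
    return [
        cbimport, "json",
        "-c", cluster,
        "-u", username,
        "-p", password,
        "-b", bucket,
        "-d", dataset_url,
        "--generate-key", key_template,
        "--format", file_format,
    ]


args = build_cbimport_json_args(
    cbimport=r"E:\Couchbase\Server\bin\cbimport.exe",  # raw string keeps the backslashes
    cluster="couchbase://localhost",
    username="Administrator",
    password="password",
    bucket="wikibase",
    dataset_url="file://wikidata-20161219-all.json",
    key_template="%id%",
)
print(args)
```

To actually start the import you could pass the list to `subprocess.run(args, check=True)`; with the default `shell=False`, no shell parses the arguments, so `%id%` and the backslashes reach cbimport unchanged.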

Based on the Wikibase data model documentation, I knew that there would be an id field in each item with a unique value. That’s why I used %id%. A more complex key can be generated with the relatively robust key generator templates that cbimport offers.
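Uniqueness matters because every rendered key becomes a document ID in the bucket, so two items that produced the same key could not both be stored as separate documents. Without documentation like Wikibase's to lean on, a quick check over a sample could catch that before a long import. Here is a minimal Python sketch; `duplicate_keys` is a hypothetical helper, and because it holds every key in memory it is meant for a sample, not the whole 100 GB file.

```python
from collections import Counter
from typing import Any, Iterable, Mapping, Set


def duplicate_keys(docs: Iterable[Mapping[str, Any]], field: str = "id") -> Set[str]:
    """Return the values of `field` that appear in more than one document.

    Raises ValueError for a document without the field, since a %field%
    key template could not produce a key for it.
    """
    counts: Counter = Counter()
    for position, doc in enumerate(docs):
        if field not in doc:
            raise ValueError(f"document {position} has no {field!r} field")
        counts[str(doc[field])] += 1
    return {key for key, seen in counts.items() if seen > 1}


sample = [{"id": "Q1"}, {"id": "P31"}, {"id": "Q1"}]
print(duplicate_keys(sample))  # {'Q1'}
```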

While cbimport ran, I carefully monitored its memory usage, since I was afraid it would have a problem with the huge dataset. But no problem: it never exceeded 21 MB of RAM while it was running.

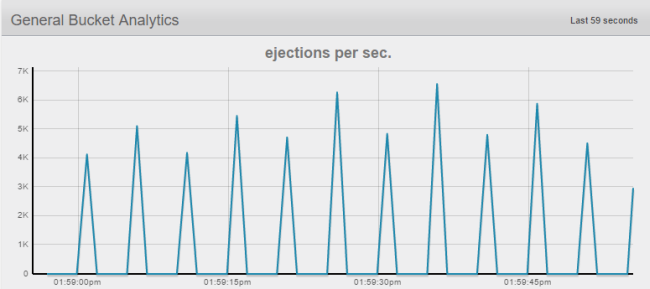

I started with a 512 MB RAM quota for my bucket in Couchbase and raised it to 924 MB during the import. Since I only have one node, I expected a lot of ejections from the cache, and that is what happened.

The total file is 99 GB, so there’s no way it could all fit in RAM on my desktop. In production, though, fitting 99+ GB into RAM across a handful of nodes wouldn’t be unrealistic. As Wikibase continues to grow, Couchbase’s easy scaling could accommodate it: just rack another server and keep going.
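As a back-of-the-envelope check on the “handful of nodes” claim, here is a minimal Python sketch. Every input is an assumption for illustration: that the data takes about as much RAM as the 99 GB JSON file (per-document metadata ignored), one replica copy, a 64 GB bucket quota per node, and that only about 85% of that quota is filled before ejection starts. Substitute your own cluster's numbers.

```python
import math


def nodes_needed(data_gb: float, bucket_quota_per_node_gb: float,
                 replicas: int = 1, usable_fraction: float = 0.85) -> int:
    """Rough number of nodes needed to hold every copy of the data in RAM.

    Simplifying assumptions: in-memory size equals the raw JSON size,
    metadata overhead is ignored, and only `usable_fraction` of each
    node's bucket quota is filled before items are ejected.
    """
    total_gb = data_gb * (1 + replicas)  # active copy plus replicas
    usable_per_node_gb = bucket_quota_per_node_gb * usable_fraction
    return math.ceil(total_gb / usable_per_node_gb)


# 99 GB with one replica and a 64 GB bucket quota per node:
# 198 GB / (64 GB * 0.85 = 54.4 GB) is about 3.64, so 4 nodes.
print(nodes_needed(99, 64))  # 4
```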

This takes a long time to run on my desktop. In fact, as I write this blog post, it’s still running. It’s up to 5.2 million documents and counting (I don’t know how many records there are in total, but disk usage is currently at 9.5 GB, so I think I have a long way to go).

When it’s done, I hope to run some interesting N1QL queries against this data (some of the examples Wikibase provides use a “Gremlin” query engine instead of SQL).

Summary

If you are interested in working with Wikibase’s data or any large repository of data that’s already in JSON format, it’s very easy to bring it to Couchbase with cbimport.

Now that I have a large data set from Wikibase, my next goal is to figure out some interesting questions I could answer by querying it with N1QL.

If you have any questions, leave a comment or talk to me on Twitter.

Hi Matthew,

Great article, just wanted to make a comment on how cbimport runs on different operating systems, since there are slight differences. It also appears that the backslash characters disappeared from the command, which should look like the following (let’s hope they don’t get killed in the comment, too):

E:\Couchbase\Server\bin\cbimport.exe json -c couchbase://localhost -u Administrator -p password -b wikibase -d file://wikidata-20161219-all.json --generate-key %id% --format list

So, how do you run cbimport on Mac OS, Windows, and Linux? Let’s assume we’re loading documents into the “default” bucket from a CSV-formatted file “test.csv” while logged in as “test.user”. The CSV file has an EXPERIMENT_ID column; we’ll use its values, prefixed with “csv::”, as document keys. Couchbase is running on localhost (127.0.0.1).

Mac OS (from the terminal window):

>> cd /Applications/Couchbase\ Server.app/Contents/Resources/couchbase-core/bin

>> ./cbimport csv -c couchbase://127.0.0.1:8091 -u Administrator -p password -b default -d file:///Users/test.user/Desktop/test.csv -g csv::%EXPERIMENT_ID%

NOTE the three slash characters in “file:///” protocol prefix, unless the file is located in the current directory (then it is “file://test.csv”).

Windows (from the command prompt):

>> cd "C:\Program Files\Couchbase\Server\bin"

>> cbimport.exe csv -c couchbase://127.0.0.1:8091 -u Administrator -p password -b default -d file://C:/Users/test.user/Desktop/test.csv -g csv::%EXPERIMENT_ID%

NOTE the two slash characters in “file://” protocol prefix.

Ubuntu Linux (from the terminal window):

>> cd /opt/couchbase/bin

>> ./cbimport csv -c couchbase://127.0.0.1:8091 -u Administrator -p password -b default -d file:///home/test.user/test.csv -g csv::%EXPERIMENT_ID%

NOTE the three slash characters in “file:///” protocol prefix, unless the file is located in the current directory (then it is “file://test.csv”).
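The slash rule in these notes boils down to “file://” followed by the path written with forward slashes: on Mac OS and Linux an absolute path already starts with “/”, which is where the third slash comes from. Here is a minimal Python sketch of that rule; `dataset_url` is a hypothetical helper that reproduces the examples above, not documented cbimport behavior.

```python
from pathlib import PurePath, PurePosixPath, PureWindowsPath


def dataset_url(path: PurePath) -> str:
    """Hypothetical helper: "file://" + the path with forward slashes.

    An absolute POSIX path begins with "/", giving "file:///..."; a Windows
    path begins with a drive letter, giving "file://C:/..."; a relative
    path gives "file://name".
    """
    return "file://" + path.as_posix()


print(dataset_url(PurePosixPath("/Users/test.user/Desktop/test.csv")))
# file:///Users/test.user/Desktop/test.csv
print(dataset_url(PureWindowsPath(r"C:\Users\test.user\Desktop\test.csv")))
# file://C:/Users/test.user/Desktop/test.csv
print(dataset_url(PurePosixPath("test.csv")))
# file://test.csv
```

Note that standard file URIs, such as those produced by pathlib's `as_uri()`, write a Windows path as `file:///C:/...`; the two-slash Windows form above is the one reported working in this comment.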
