Couchbase further improves high availability for mission-critical deployments and reduces operator intervention. Couchbase enhances the detection of common disk failures and automatically fails over the node with bad disks saving operators time and energy. It also handles multiple server failures based on the replica count to avoid data loss, and can fail over an entire server group if a rack or zone is not available.

Users can now configure automatic failover for disk, multiple node and entire server group (rack-zone) in Couchbase Server 5.5.

Let’s take a look at each of those enhancements in detail.

Auto-FailOver on Disk Issues

Prior to Couchbase Server 5.5, cluster manager did not automatically failover when it encounters disk related issues on a node. Node continues to operate for a while, till it runs out of memory or encounters some other issue.

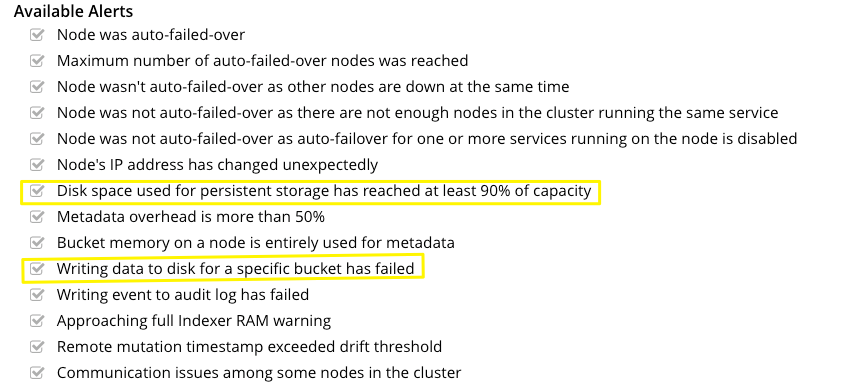

However, cluster manager has built-in alerts for “when disk failure is detected while persisting data to disk” and “Disk space used for persistent storage has reached at least 90% of capacity”.

In Couchbase Server 5.5, users will be able to configure following settings on the Node Availability Settings in the Couchbase Web Console or via the CLI/REST API.

-

- Enable auto-failover for sustained data disk read/write failures

- Enable node auto-failover to turn on this feature

- It will be off by default.

- The time period in seconds

- Minimum can set to 5 secs, maximum to 3600 secs and the default is 120 secs.

- Enable auto-failover for sustained data disk read/write failures

How does it work?

Couchbase Cluster manager keeps monitoring two stats –

- Number of failures while trying to write items to the disk.

- Number of failures while trying to read from a disk.

If this stats continue to increase for the duration of the auto-failover timeout and “auto-failover on disk issues” is turned on, then the cluster manager will automatically failover the node.

Above stats are available on Statistics page as –

- # disk read failures

- # disk write failures

Note – It is possible for one stat to increase and not the other. E.g. when the disk is full, the writes will fail causing “write failures” to grow but reads can still go through.

Auto-FailOver More Than One Node

Prior to Couchbase Server 5.5, the auto-failover count or the quota is set to 1 i.e. only one node can be automatically failed over before requiring user intervention. This was constraint to prevent a chain reaction failure of multiple or all nodes in the cluster.

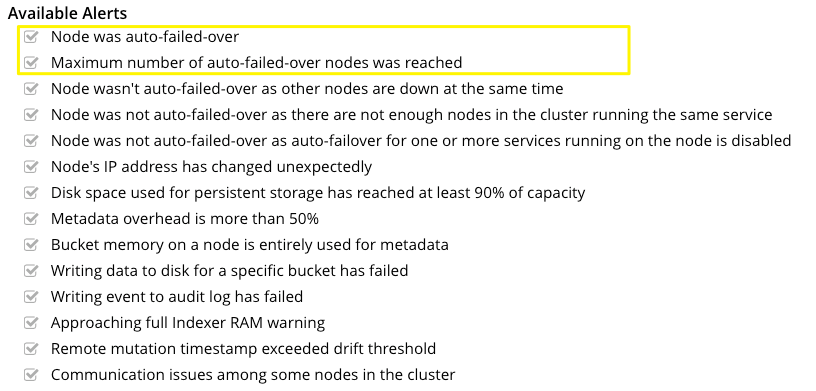

However, cluster manager did provide built-in alerting “Node was auto-failed-over” on the first node and “Maximum number of auto-failed-over nodes was reached”.

In Couchbase Server 5.5, users will be able to configure failover of multiple instance if all the buckets in the cluster are configured with > 1 replicas in which case maximum of 3 instances can be failed over automatically without requiring manual intervention such as resetting the auto-failover quota.

Users can now configure following settings on the Node Availability Settings in the Couchbase Web Console or via the CLI/REST API.

-

- Enable auto-failover for up to default 1 event and maximum of 3 events

- This will enable the feature and can be turned on only when auto-failover is enabled.

- It will be off by default.

- The time period in seconds

- Minimum can set to 5 secs, maximum to 3600 secs and default is 120 secs.

- Can edited by user anytime

- Enable auto-failover for up to default 1 event and maximum of 3 events

How does it work?

Cluster manager validates number of configured replicas for all the buckets in the cluster. In case of different buckets with different configured replicas cluster manager will only consider maximum replicas configured across all buckets.

For example – If a cluster has

-

- one bucket with 1 replica and another with 2 replicas, then allow auto-failover of up to only 1 node.

- two buckets with 2 replicas, then allow auto-failover of up to 2 nodes.

- three buckets with 2 replicas, 2 replicas and 3 replicas configured, then allow auto-failover of up to 2 nodes.

Maximum auto-failover quota applies to all nodes in the cluster including Data, Query, Index and Search nodes.

If two or more nodes fail at the exact same time then auto-failover will not work (irrespective of the max count set by the user) with exception of server group auto-failover discussed later. This constraint is in place to prevents a network partition from causing two or more halves of a cluster from failing each other over. It protects data integrity and consistency.

Note – When multiple nodes fails that will increase load on the cluster. Users who want to allow auto-failover of more than one node, need to make sure that they have sufficient capacity to handle failures.

Auto-FailOver Server Groups (Rack-Zone Awareness)

Rack-Zone Awareness enables logical groupings of servers on a cluster where each server group physically belongs to a rack or Availability Zone.

In Couchbase Server 5.5, users will be able to configure following settings on the Node Availability Settings in the Couchbase Web Console or via the CLI/REST API.

- Enable auto-failover of server groups

- Enable node auto-failover to turn on this feature

- It will be off by default.

How does it work?

For auto-failover of server groups to work –

- Clusters requires minimum of 3 server groups at the time of failure. This constraint is required since if there are only two server groups and there is a network partition between them, then both server groups might try to failover each other over.

- All nodes in the server group have failed. This indicates a correlated failure that has probably affected the entire zone or rack.

- All failed nodes belong to the same server group. This prevents a network partition from causing two or more halves of a cluster from failing each other over.

Additional resources

- Download Couchbase Server 5.5

- Couchbase Server 5.5 documentation

- Couchbase Server 5.5 Docker Container

- Share your thoughts on the Couchbase Forums

When we are doing auto-failover more than one node. We are checking for least common denominator of replica count and based on that we can do that many node failovers?