Have you found yourself asking the question, “What is fuzzy matching?” Fuzzy matching allows you to identify non-exact matches of your target item. It is the foundation stone of many search engine frameworks and one of the main reasons why you can get relevant search results even if your query has a typo or uses a different verb tense.

As you might expect, there are many fuzzy search algorithms that can be used for text, but virtually all search engine frameworks (including bleve) primarily use the Levenshtein distance for fuzzy string matching:

Levenshtein Distance

Also known as edit distance, it is the number of operations (deletions, insertions, or substitutions) required to transform a source string into the target one. For example, if the target term is “book” and the source is “back”, you need to change the “a” to “o” and the “c” to “o”, which gives a Levenshtein distance of 2. Edit distance is very easy to implement, and it is a popular challenge in coding interviews (you can find Levenshtein implementations in JavaScript, Kotlin, Java, and many other languages here).
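For reference, here is a minimal sketch of the classic dynamic-programming version in Java. The class and method names are illustrative and not taken from bleve or Couchbase. It keeps only two rows of the distance table, so memory grows with the length of the target string only.

```java
public class Levenshtein {

    /** Minimum number of insertions, deletions and substitutions turning source into target. */
    static int distance(String source, String target) {
        int m = target.length();
        // prev[j] = distance between the source prefix processed so far and target.substring(0, j)
        int[] prev = new int[m + 1];
        int[] curr = new int[m + 1];
        for (int j = 0; j <= m; j++) {
            prev[j] = j; // empty source prefix: j insertions
        }
        for (int i = 1; i <= source.length(); i++) {
            curr[0] = i; // empty target prefix: i deletions
            for (int j = 1; j <= m; j++) {
                int substitution = source.charAt(i - 1) == target.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(
                        Math.min(prev[j] + 1,          // delete a character from source
                                 curr[j - 1] + 1),     // insert a character into source
                        prev[j - 1] + substitution);   // keep or substitute a character
            }
            int[] tmp = prev; // reuse the old row for the next iteration
            prev = curr;
            curr = tmp;
        }
        return prev[m];
    }

    public static void main(String[] args) {
        System.out.println(distance("back", "book")); // prints 2
        System.out.println(distance("book", "hook")); // prints 1
    }
}
```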

Additionally, some frameworks also support the Damerau-Levenshtein distance:

Damerau-Levenshtein distance

It is an extension of the Levenshtein distance that allows one extra operation: transposition of two adjacent characters.

Ex: TSAR to STAR

Damerau-Levenshtein distance = 1 (swapping the positions of S and T costs only one operation)

Levenshtein distance = 2 (replace T with S and S with T)
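The extra operation needs just one additional rule in the same dynamic-programming table. The sketch below implements the restricted variant usually called optimal string alignment (OSA), a simpler relative of the full Damerau-Levenshtein distance (the two can disagree on strings where a transposed pair is edited again). The class name and the `allowTransposition` flag are illustrative.

```java
public class DamerauLevenshtein {

    /**
     * Edit distance with insertions, deletions and substitutions; when allowTransposition
     * is true, swapping two adjacent characters also counts as one edit (OSA variant).
     */
    static int distance(String a, String b, boolean allowTransposition) {
        int n = a.length(), m = b.length();
        int[][] d = new int[n + 1][m + 1];
        for (int i = 0; i <= n; i++) d[i][0] = i; // i deletions
        for (int j = 0; j <= m; j++) d[0][j] = j; // j insertions
        for (int i = 1; i <= n; i++) {
            for (int j = 1; j <= m; j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                d[i][j] = Math.min(Math.min(d[i - 1][j] + 1, d[i][j - 1] + 1),
                                   d[i - 1][j - 1] + cost);
                // Extra rule: "...xy" vs "...yx" can be fixed with a single transposition
                if (allowTransposition && i > 1 && j > 1
                        && a.charAt(i - 1) == b.charAt(j - 2)
                        && a.charAt(i - 2) == b.charAt(j - 1)) {
                    d[i][j] = Math.min(d[i][j], d[i - 2][j - 2] + 1);
                }
            }
        }
        return d[n][m];
    }

    public static void main(String[] args) {
        System.out.println(distance("TSAR", "STAR", true));  // prints 1 (Damerau-Levenshtein / OSA)
        System.out.println(distance("TSAR", "STAR", false)); // prints 2 (plain Levenshtein)
    }
}
```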

Fuzzy matching and relevance

Fuzzy matching has one big side effect: it messes up relevance. Although Damerau-Levenshtein is a fuzzy matching algorithm that accounts for most common user misspellings, it can also include a significant number of false positives, especially in a language like English, where words average just 5 letters. That is why most search engine frameworks prefer to stick with the Levenshtein distance. Let’s look at a real fuzzy matching example:

First, we are going to use this movie catalog dataset. I highly recommend it if you want to play with full-text search. Then, let’s search for movies with “book” in the title. A simple version of the code looks like the following:

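```java
import com.couchbase.client.java.search.SearchQuery;
import com.couchbase.client.java.search.queries.MatchQuery;
import com.couchbase.client.java.search.result.SearchQueryResult;

String indexName = "movies_index";
// Match the term "book" in the title field, with no fuzziness
MatchQuery query = SearchQuery.match("book").field("title");
SearchQueryResult result = movieRepository.getCouchbaseOperations().getCouchbaseBucket().query(
        new SearchQuery(indexName, query).highlight().limit(6));
printResults(result); // printResults is a helper defined elsewhere in the sample
```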

The code above will bring the following results:

```
1) The Book Thief
score: 4.826942606027416
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> Thief]
-----------------------------
2) The Book of Eli
score: 4.826942606027416
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> of Eli]
-----------------------------
3) The Book of Life
score: 4.826942606027416
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> of Life]
-----------------------------
4) Black Book
score: 4.826942606027416
Hit Locations:
field:title term:book fragment:[Black <mark>Book</mark>]
-----------------------------
5) The Jungle Book
score: 4.826942606027416
Hit Locations:
field:title term:book fragment:[The Jungle <mark>Book</mark>]
-----------------------------
6) The Jungle Book 2
score: 3.9411821308689627
Hit Locations:
field:title term:book fragment:[The Jungle <mark>Book</mark> 2]
-----------------------------
```

By default, the matching is case-insensitive, but you can easily change this behavior by creating new indexes with different analyzers.

Now, let’s add fuzzy matching to our query by setting fuzziness to 1 (Levenshtein distance 1), which means that “book” and “look” will have the same relevance.

```java
String indexName = "movies_index";
// Same query as before, but now matching terms within 1 edit (Levenshtein distance 1)
MatchQuery query = SearchQuery.match("book").field("title").fuzziness(1);
SearchQueryResult result = movieRepository.getCouchbaseOperations().getCouchbaseBucket().query(
        new SearchQuery(indexName, query).highlight().limit(6));
printResults(result);
```

And here is the fuzzy search result:

```
1) Hook
score: 1.1012248063242538
Hit Locations:
field:title term:hook fragment:[<mark>Hook</mark>]
-----------------------------
2) Here Comes the Boom
score: 0.7786835148361213
Hit Locations:
field:title term:boom fragment:[Here Comes the <mark>Boom</mark>]
-----------------------------
3) Look Who's Talking Too
score: 0.7047266634351538
Hit Locations:
field:title term:look fragment:[<mark>Look</mark> Who's Talking Too]
-----------------------------
4) Look Who's Talking
score: 0.7047266634351538
Hit Locations:
field:title term:look fragment:[<mark>Look</mark> Who's Talking]
-----------------------------
5) The Book Thief
score: 0.5228811753737184
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> Thief]
-----------------------------
6) The Book of Eli
score: 0.5228811753737184
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> of Eli]
-----------------------------
```

Now, the movie called “Hook” is the very first search result, which might not be exactly what the user is expecting in a search for “Book”.
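Why do “Hook”, “Boom” and “Look” match at all? Conceptually, a fuzzy term query expands the search term into every indexed term within the allowed edit distance and then searches for all of them. The sketch below does this with a brute-force scan over a small, hypothetical term dictionary built from words in the titles above. It is not bleve's code: real engines typically avoid the linear scan (Lucene, for example, uses Levenshtein automata over its term dictionary).

```java
import java.util.ArrayList;
import java.util.List;

public class FuzzyExpansion {

    /** Classic Levenshtein distance (two-row dynamic programming). */
    static int distance(String s, String t) {
        int[] prev = new int[t.length() + 1];
        int[] curr = new int[t.length() + 1];
        for (int j = 0; j <= t.length(); j++) prev[j] = j;
        for (int i = 1; i <= s.length(); i++) {
            curr[0] = i;
            for (int j = 1; j <= t.length(); j++) {
                int cost = s.charAt(i - 1) == t.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(Math.min(prev[j] + 1, curr[j - 1] + 1), prev[j - 1] + cost);
            }
            int[] tmp = prev; prev = curr; curr = tmp;
        }
        return prev[t.length()];
    }

    /** Every dictionary term within maxEdits of the search term (brute-force scan). */
    static List<String> expand(String term, List<String> dictionary, int maxEdits) {
        List<String> matches = new ArrayList<>();
        for (String candidate : dictionary) {
            if (distance(term, candidate) <= maxEdits) {
                matches.add(candidate);
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        // Hypothetical term dictionary: lowercase words taken from the titles above
        List<String> dictionary =
                List.of("book", "hook", "boom", "look", "thief", "jungle", "black", "life");
        System.out.println(expand("book", dictionary, 0)); // prints [book]
        System.out.println(expand("book", dictionary, 1)); // prints [book, hook, boom, look]
    }
}
```

With fuzziness 1, the single term “book” quietly turns into four terms, and each of them can then be scored on its own merits, which is how a short, unrelated title like “Hook” can end up on top.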

How to minimize false positives during fuzzy lookups

In an ideal world, users would never make typos while searching for something. However, that is not the world we live in, and if you want your users to have a pleasant experience, you need to handle at least an edit distance of 1. Therefore, the real question is: how can we perform fuzzy string matching while minimizing relevance loss?

We can take advantage of one characteristic of most search engine frameworks: a match with a lower edit distance usually scores higher. That characteristic allows us to combine two queries with different fuzziness levels into one:

```java
import com.couchbase.client.java.search.queries.DisjunctionQuery;

String indexName = "movies_index";
String word = "Book";
// Fuzzy clause: matches terms within 1 edit of the search word
MatchQuery titleFuzzy = SearchQuery.match(word).fuzziness(1).field("title");
// Exact clause: matches only the word itself, so exact hits score on both clauses
MatchQuery titleSimple = SearchQuery.match(word).field("title");
// Disjunction (OR) of the two clauses
DisjunctionQuery ftsQuery = SearchQuery.disjuncts(titleSimple, titleFuzzy);
SearchQueryResult result = movieRepository.getCouchbaseOperations().getCouchbaseBucket().query(
        new SearchQuery(indexName, ftsQuery).highlight().limit(20));
printResults(result);
```

Here is the result of the fuzzy query above:

```
1) The Book Thief
score: 2.398890092610849
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> Thief]
field:title term:book fragment:[The <mark>Book</mark> Thief]
-----------------------------
2) The Book of Eli
score: 2.398890092610849
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> of Eli]
field:title term:book fragment:[The <mark>Book</mark> of Eli]
-----------------------------
3) The Book of Life
score: 2.398890092610849
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> of Life]
field:title term:book fragment:[The <mark>Book</mark> of Life]
-----------------------------
4) Black Book
score: 2.398890092610849
Hit Locations:
field:title term:book fragment:[Black <mark>Book</mark>]
field:title term:book fragment:[Black <mark>Book</mark>]
-----------------------------
5) The Jungle Book
score: 2.398890092610849
Hit Locations:
field:title term:book fragment:[The Jungle <mark>Book</mark>]
field:title term:book fragment:[The Jungle <mark>Book</mark>]
-----------------------------
6) The Jungle Book 2
score: 1.958685557004688
Hit Locations:
field:title term:book fragment:[The Jungle <mark>Book</mark> 2]
field:title term:book fragment:[The Jungle <mark>Book</mark> 2]
-----------------------------
7) National Treasure: Book of Secrets
score: 1.6962714808368062
Hit Locations:
field:title term:book fragment:[National Treasure: <mark>Book</mark> of Secrets]
field:title term:book fragment:[National Treasure: <mark>Book</mark> of Secrets]
-----------------------------
8) American Pie Presents: The Book of Love
score: 1.517191317611584
Hit Locations:
field:title term:book fragment:[American Pie Presents: The <mark>Book</mark> of Love]
field:title term:book fragment:[American Pie Presents: The <mark>Book</mark> of Love]
-----------------------------
9) Hook
score: 0.5052232518679681
Hit Locations:
field:title term:hook fragment:[<mark>Hook</mark>]
-----------------------------
10) Here Comes the Boom
score: 0.357246781294941
Hit Locations:
field:title term:boom fragment:[Here Comes the <mark>Boom</mark>]
-----------------------------
11) Look Who's Talking Too
score: 0.32331663301992025
Hit Locations:
field:title term:look fragment:[<mark>Look</mark> Who's Talking Too]
-----------------------------
12) Look Who's Talking
score: 0.32331663301992025
Hit Locations:
field:title term:look fragment:[<mark>Look</mark> Who's Talking]
-----------------------------
```

As you can see, this result is much closer to what the user might expect. Note that we are now using a class called DisjunctionQuery; a disjunction is the equivalent of the “OR” operator in SQL.
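To see why exact matches now float to the top, here is a toy model of the disjunction: each clause contributes a score only when it matches, and the disjunction adds up the contributions of the matching clauses. The per-clause scores (1.0 for an exact match, 1 / (1 + distance) for a fuzzy one) are assumptions chosen for illustration. This is not bleve's actual scoring formula, which also weighs factors such as term frequency and term rarity.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class DisjunctionToy {

    /** Classic Levenshtein distance (two-row dynamic programming). */
    static int distance(String s, String t) {
        int[] prev = new int[t.length() + 1];
        int[] curr = new int[t.length() + 1];
        for (int j = 0; j <= t.length(); j++) prev[j] = j;
        for (int i = 1; i <= s.length(); i++) {
            curr[0] = i;
            for (int j = 1; j <= t.length(); j++) {
                int cost = s.charAt(i - 1) == t.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(Math.min(prev[j] + 1, curr[j - 1] + 1), prev[j - 1] + cost);
            }
            int[] tmp = prev; prev = curr; curr = tmp;
        }
        return prev[t.length()];
    }

    /** Smallest edit distance between the (lowercase) term and any whitespace-separated title token. */
    static int bestDistance(String term, String title) {
        int best = Integer.MAX_VALUE;
        for (String token : title.toLowerCase().split("\\s+")) {
            best = Math.min(best, distance(term, token));
        }
        return best;
    }

    /** Toy score for "exact clause OR fuzzy clause": contributions of matching clauses are summed. */
    static double score(String term, String title, int fuzziness) {
        int d = bestDistance(term.toLowerCase(), title);
        double exactClause = d == 0 ? 1.0 : 0.0;
        double fuzzyClause = d <= fuzziness ? 1.0 / (1 + d) : 0.0; // closer matches score higher
        return exactClause + fuzzyClause;
    }

    public static void main(String[] args) {
        List<String> titles = new ArrayList<>(List.of(
                "Hook", "Here Comes the Boom", "The Book Thief", "Black Book", "Back to the Future"));
        // Highest toy score first; List.sort is stable, so ties keep their original order
        titles.sort(Comparator.comparingDouble((String t) -> score("Book", t, 1)).reversed());
        for (String t : titles) {
            System.out.printf("%.2f  %s%n", score("Book", t, 1), t);
        }
    }
}
```

Exact matches collect points from both clauses, while “Hook” or “Boom” only get the smaller fuzzy contribution, so they drop below every exact hit; this mirrors the ordering in the results above, even though the real scores are computed differently.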

What else could we improve to reduce the negative side effect of a fuzzy matching algorithm? Let’s reanalyze our problem to understand if it needs further improvement:

We already know that fuzzy lookups can produce some unexpected results (e.g. Book -> Look, Hook). However, a single term search is usually a terrible query, as it barely gives us a hint of what exactly the user is trying to accomplish.

Even Google, which has arguably one of the most highly developed fuzzy search algorithms in use, does not know exactly what I’m looking for when I search for “table”:

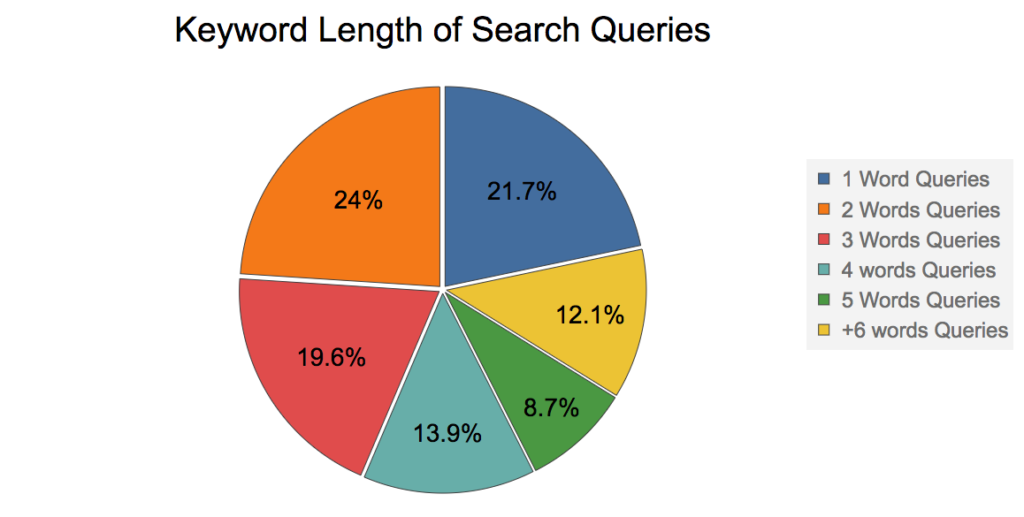

So, what is the average number of keywords in a search query? To answer this question, I will show a graph from Rand Fishkin’s 2016 presentation (he is one of the best-known gurus in the SEO world).

According to the graph above, ~80% of the search queries have 2 or more keywords, so let’s try to search for the movie “Black Book” using fuzziness 1:

```java
String indexName = "movies_index";
// Two keywords, each allowed to match within 1 edit
MatchQuery query = SearchQuery.match("Black Book").field("title").fuzziness(1);
SearchQueryResult result = movieRepository.getCouchbaseOperations().getCouchbaseBucket().query(
        new SearchQuery(indexName, query).highlight().limit(12));
printResults(result);
```

Result:

```
1) Black Book
score: 0.6946442424065404
Hit Locations:
field:title term:book fragment:[<mark>Black</mark> <mark>Book</mark>]
field:title term:black fragment:[<mark>Black</mark> <mark>Book</mark>]
-----------------------------
2) Hook
score: 0.40139742528039857
Hit Locations:
field:title term:hook fragment:[<mark>Hook</mark>]
-----------------------------
3) Attack the Block
score: 0.2838308365090324
Hit Locations:
field:title term:block fragment:[Attack the <mark>Block</mark>]
-----------------------------
4) Here Comes the Boom
score: 0.2838308365090324
Hit Locations:
field:title term:boom fragment:[Here Comes the <mark>Boom</mark>]
-----------------------------
5) Look Who's Talking Too
score: 0.25687349813115684
Hit Locations:
field:title term:look fragment:[<mark>Look</mark> Who's Talking Too]
-----------------------------
6) Look Who's Talking
score: 0.25687349813115684
Hit Locations:
field:title term:look fragment:[<mark>Look</mark> Who's Talking]
-----------------------------
7) Grosse Pointe Blank
score: 0.2317469073782136
Hit Locations:
field:title term:blank fragment:[Grosse Pointe <mark>Blank</mark>]
-----------------------------
8) The Book Thief
score: 0.19059065534780495
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> Thief]
-----------------------------
9) The Book of Eli
score: 0.19059065534780495
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> of Eli]
-----------------------------
10) The Book of Life
score: 0.19059065534780495
Hit Locations:
field:title term:book fragment:[The <mark>Book</mark> of Life]
-----------------------------
11) The Jungle Book
score: 0.19059065534780495
Hit Locations:
field:title term:book fragment:[The Jungle <mark>Book</mark>]
-----------------------------
12) Back to the Future
score: 0.17061000968368
Hit Locations:
field:title term:back fragment:[<mark>Back</mark> to the Future]
-----------------------------
```

Not bad. We got the movie we were searching for as the first result. However, a disjunction query would still bring a better set of results.

Still, it looks like we have a nice new property here: the side effect of fuzzy matching decreases slightly as the number of keywords increases. A search for “Black Book” with fuzziness 1 could also match combinations such as “back look” or “lack cook” (each word within edit distance 1), but these are unlikely to be real movie titles.
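One way to see this property: to compete with the real title, a false positive has to match fuzzily on every keyword, not just one. The sketch below counts, for a few titles taken from the results above, how many query terms find a title token within one edit. The `matchedTerms` helper is hypothetical and ignores scoring entirely; it only illustrates the coverage argument.

```java
import java.util.List;

public class MultiTermFuzzy {

    /** Classic Levenshtein distance (two-row dynamic programming). */
    static int distance(String s, String t) {
        int[] prev = new int[t.length() + 1];
        int[] curr = new int[t.length() + 1];
        for (int j = 0; j <= t.length(); j++) prev[j] = j;
        for (int i = 1; i <= s.length(); i++) {
            curr[0] = i;
            for (int j = 1; j <= t.length(); j++) {
                int cost = s.charAt(i - 1) == t.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(Math.min(prev[j] + 1, curr[j - 1] + 1), prev[j - 1] + cost);
            }
            int[] tmp = prev; prev = curr; curr = tmp;
        }
        return prev[t.length()];
    }

    /** Number of (lowercase) query terms that have at least one title token within maxEdits. */
    static int matchedTerms(List<String> queryTerms, String title, int maxEdits) {
        String[] tokens = title.toLowerCase().split("\\s+");
        int count = 0;
        for (String term : queryTerms) {
            for (String token : tokens) {
                if (distance(term, token) <= maxEdits) {
                    count++;
                    break; // one fuzzy hit per query term is enough
                }
            }
        }
        return count;
    }

    public static void main(String[] args) {
        List<String> query = List.of("black", "book");
        List<String> titles = List.of("Black Book", "Hook", "Attack the Block",
                "Grosse Pointe Blank", "The Book Thief", "Back to the Future");
        for (String title : titles) {
            System.out.println(matchedTerms(query, title, 1) + "/" + query.size() + "  " + title);
        }
    }
}
```

Only “Black Book” covers both keywords; every other title covers just one, which is consistent with it ranking first by a clear margin in the results above.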

A search for “book eli” with fuzziness 2 still brings “The Book of Eli” as the third result:

```java
String indexName = "movies_index";
// Two short keywords, each allowed to match within 2 edits
MatchQuery query = SearchQuery.match("book eli").field("title").fuzziness(2);
SearchQueryResult result = movieRepository.getCouchbaseOperations().getCouchbaseBucket().query(
        new SearchQuery(indexName, query).highlight().limit(12));
printResults(result);
```

```
1) Ed Wood
score: 0.030723793900031805
Hit Locations:
field:title term:wood fragment:[<mark>Ed</mark> <mark>Wood</mark>]
field:title term:ed fragment:[<mark>Ed</mark> <mark>Wood</mark>]
-----------------------------
2) Man of Tai Chi
score: 0.0271042761982626
Hit Locations:
field:title term:chi fragment:[Man of <mark>Tai</mark> <mark>Chi</mark>]
field:title term:tai fragment:[Man of <mark>Tai</mark> <mark>Chi</mark>]
-----------------------------
3) The Book of Eli
score: 0.02608335441670756
Hit Locations:
field:title term:eli fragment:[The <mark>Book</mark> of <mark>Eli</mark>]
field:title term:book fragment:[The <mark>Book</mark> of <mark>Eli</mark>]
-----------------------------
4) The Good Lie
score: 0.02439822770591834
Hit Locations:
field:title term:lie fragment:[The <mark>Good</mark> <mark>Lie</mark>]
field:title term:good fragment:[The <mark>Good</mark> <mark>Lie</mark>]
-----------------------------
```

However, as the average English word is 5 letters long, I would NOT recommend using an edit distance greater than 2 unless the user is searching for long words that are easy to misspell, like “Schwarzenegger” (at least for non-Germans and non-Austrians).
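If you want to apply this advice automatically, one option is to derive the fuzziness from the length of each search term. The helper below is hypothetical: its tiers up to 2 edits mirror the defaults of Elasticsearch's `AUTO` fuzziness (exact match for 1 to 2 characters, 1 edit for 3 to 5, 2 edits above that), and the 3-edit tier for very long words is an assumption based on the recommendation above. Some engines cap the distance anyway; Lucene's `FuzzyQuery`, for instance, supports at most 2 edits.

```java
public class FuzzinessPolicy {

    /**
     * Hypothetical helper: picks a maximum edit distance from the term length.
     * Tiers up to 2 mirror Elasticsearch's AUTO defaults; the 3-edit tier is an assumption.
     */
    static int fuzzinessFor(String term) {
        int length = term.length();
        if (length <= 2) return 0;  // "ed", "to": any edit turns it into a different word
        if (length <= 5) return 1;  // typical English words such as "book" or "black"
        if (length <= 11) return 2;
        return 3;                   // very long, easy-to-misspell words such as "Schwarzenegger"
    }

    public static void main(String[] args) {
        for (String word : new String[] {"ed", "book", "black", "treasure", "Schwarzenegger"}) {
            System.out.println(word + " -> " + fuzzinessFor(word));
        }
    }
}
```

The value returned per term could then be passed to `fuzziness(...)` on a per-keyword match query, instead of using one fixed fuzziness for the whole search.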

Conclusion

In this article, we discussed fuzzy matching and how to overcome its major side effect without hurting relevance. Mind you, fuzzy matching is just one of the many features you should take advantage of when implementing a relevant and user-friendly search. We are going to discuss some of the others during this series: n-grams, stopwords, stemming, shingles, elision, etc.

Also check out Part 1 and Part 2 of this series.

In the meantime, if you have any questions, tweet me at @deniswsrosa.

How does the score returned from the index relate to a percentage match? How would one construct a query for which the relevancy score of the results represent a relevancy of > 50%, for example?

If you want to understand more about how documents are scored, both Lucene and bleve have an “explain” method. In Couchbase, you can set explain(true) to see exactly how the score is calculated. Results are sorted by score by default, so the most relevant ones should come first in the list. What exactly are you trying to achieve?